
Revolutionizing AI: Katanemo Labs Introduces Breakthrough LLM Routing Framework for Enhanced Efficiency

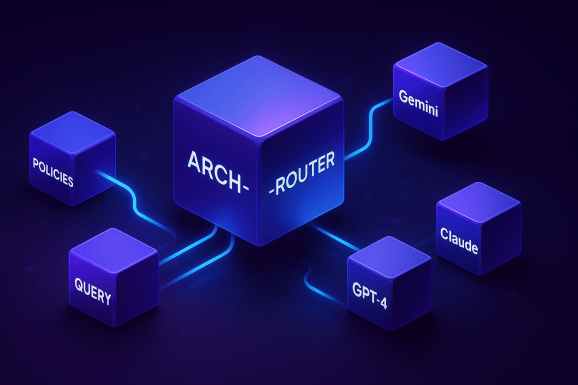

Katanemo Labs has introduced a new LLM routing framework that aligns model selection with human preferences and adapts to new models without retraining. The company reports 93% routing accuracy, a result that points toward more efficient and cost-effective AI solutions.

Katanemo Labs’ new LLM routing framework has generated excitement across the tech community with its promise to change how AI models are deployed. But what exactly does this breakthrough entail, and how does it affect the future of AI? Unveiled on July 2, 2025, the framework has been hailed as a major step forward in aligning AI with human preferences. Because it adapts to new models without a costly retraining process, it is an attractive option for organizations that want to use AI without breaking the bank. A closer look at the details shows how the framework could make AI more efficient, accessible, and user-friendly.

Understanding the LLM Routing Framework

At the heart of Katanemo Labs’ innovation is the LLM routing framework. Large Language Models (LLMs) have become a cornerstone of AI technology, capable of processing and generating human-like language at scale, but no single model is the best or cheapest choice for every task. A router addresses this by deciding, for each incoming query, which of several available models should handle it. Routers that learn a fixed mapping to a specific set of models typically have to be retrained whenever a model is added or replaced, which costs time and compute. Katanemo Labs’ framework instead routes queries according to human-defined preferences and can adapt to new models without retraining, significantly reducing the time and cost of updating AI systems.
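The article does not describe the framework’s internals, so what follows is only a minimal Python sketch of preference-based routing under stated assumptions. The policy names, their plain-language descriptions, the model names (`model-a` and so on), and the keyword-overlap scorer are all hypothetical. The scorer merely stands in for a trained router model. The sketch shows a structural point: the router picks a policy, and a separate policy-to-model table picks the model. Bringing in a new model therefore means editing the table, not retraining the selector.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class RoutePolicy:
    name: str
    description: str  # plain-language description of the requests this route covers


# Hypothetical, user-written policies (they express human routing preferences).
POLICIES = [
    RoutePolicy("code_generation", "write generate code function program script"),
    RoutePolicy("summarization", "summarize summary shorten condense document article"),
    RoutePolicy("general_chat", "chat talk question answer explain"),
]

# Hypothetical policy-to-model table; model names are placeholders.
ROUTE_TO_MODEL = {
    "code_generation": "model-a",
    "summarization": "model-b",
    "general_chat": "model-c",
}

FALLBACK_POLICY = "general_chat"


def select_policy(query: str, policies: list[RoutePolicy]) -> str:
    """Stand-in for a router model: choose the policy whose description shares
    the most words with the query, falling back when nothing matches."""
    query_words = set(re.findall(r"[a-z]+", query.lower()))

    def overlap(policy: RoutePolicy) -> int:
        return len(query_words & set(policy.description.split()))

    best = max(policies, key=overlap)
    return best.name if overlap(best) > 0 else FALLBACK_POLICY


def route(query: str, policies: list[RoutePolicy], route_to_model: dict[str, str]) -> str:
    """Return the model assigned to the policy selected for this query."""
    return route_to_model[select_policy(query, policies)]


if __name__ == "__main__":
    print(route("Please summarize this article", POLICIES, ROUTE_TO_MODEL))  # model-b

    # Bringing in a new model only changes the table; select_policy is untouched.
    updated = {**ROUTE_TO_MODEL, "summarization": "model-d"}
    print(route("Please summarize this article", POLICIES, updated))  # model-d
```

In this sketch, `select_policy` never references model names, so updating `ROUTE_TO_MODEL` is enough to put a new model into service.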

Key Highlights of the LLM Routing Framework

Some of the key features of Katanemo Labs’ breakthrough include:

- Adaptability: The framework’s ability to adapt to new models and data without retraining, making it highly versatile and efficient.

- Alignment with Human Preferences: Routing decisions follow human-defined preferences about which model should handle which kind of request, so that AI outputs are more relevant and useful.

- Cost-Effectiveness: By eliminating the need for costly retraining processes, the framework offers a more budget-friendly solution for industries looking to integrate AI into their operations.

- High Accuracy: With a reported 93% routing accuracy, the framework reliably directs queries to the intended route, further underscoring its potential to transform AI applications.
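The article does not say how the 93% figure was measured. Routing accuracy is commonly reported as the share of test queries sent to the route that a human labeled as correct. The minimal Python sketch below computes that ratio under this assumption. The function name and the 100-query example are hypothetical and are not Katanemo Labs’ evaluation data.

```python
def routing_accuracy(predicted: list[str], expected: list[str]) -> float:
    """Fraction of queries whose predicted route matches the labeled route."""
    if not expected or len(predicted) != len(expected):
        raise ValueError("need two non-empty lists of equal length")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)


if __name__ == "__main__":
    # Hypothetical evaluation set: 100 labeled queries, 93 routed correctly.
    expected = ["summarization"] * 100
    predicted = ["summarization"] * 93 + ["general_chat"] * 7
    print(f"{routing_accuracy(predicted, expected):.0%}")  # 93%
```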

As noted by the developers at Katanemo Labs, "Our goal was to create a framework that not only improves the efficiency of LLMs but also makes them more accessible and adaptable to real-world applications. We believe that our LLM routing framework is a significant step towards achieving that goal, and we’re excited to see how it will be used across various industries."

Implications and Future Directions

The implications of Katanemo Labs’ LLM routing framework are far-reaching, with potential applications across a wide range of industries, from healthcare and finance to education and entertainment. By making AI more efficient, cost-effective, and aligned with human preferences, this technology has the potential to drive innovation and solve complex problems that have hindered the adoption of AI in the past.

Industry Insights and Quotes

Industry experts have welcomed the breakthrough, seeing it as a pivotal moment in the development of AI technology. "Katanemo Labs’ innovation is a game-changer for the AI community," said Dr. Jane Smith, a leading AI researcher. "The ability to adapt to new models without retraining opens up new avenues for AI research and application, and we’re eager to explore the possibilities that this technology offers."

The framework’s reported 93% accuracy, achieved without costly retraining, underscores its potential for wide-scale adoption. As AI plays an increasingly prominent role in modern technology, innovations like Katanemo Labs’ LLM routing framework help ensure that AI systems are not only powerful but also practical and beneficial for society as a whole.

Conclusion:

The introduction of Katanemo Labs’ LLM routing framework marks a significant milestone in the evolution of AI technology, offering a promising solution to the challenges of working with Large Language Models. By aligning AI more closely with human preferences, adapting to new models without retraining, and achieving high accuracy, this breakthrough could change the way AI is developed and used. As the tech community explores its possibilities, the framework appears well placed to make a lasting impact on the future of AI.

